We have all seen the headlines: AI agents promise to change everything.

But here’s the reality, from someone who has been in the enterprise trenches from the early days of web transformation, through the mad dash to the cloud, and now to AI agents: most companies can’t see, control, or govern the AI that’s already running inside their IT environment.

This is the big problem only a few people are talking about:

AI agents are the first generation of enterprise technology to actively bypass the governance systems that we built our careers on.

This blog is about giving a name to that problem - the “Invisible Layer” - and explaining why it’s growing fast, right under IT’s nose. I don’t have all the solutions yet, but getting clear on the problem is step one for the decade ahead.

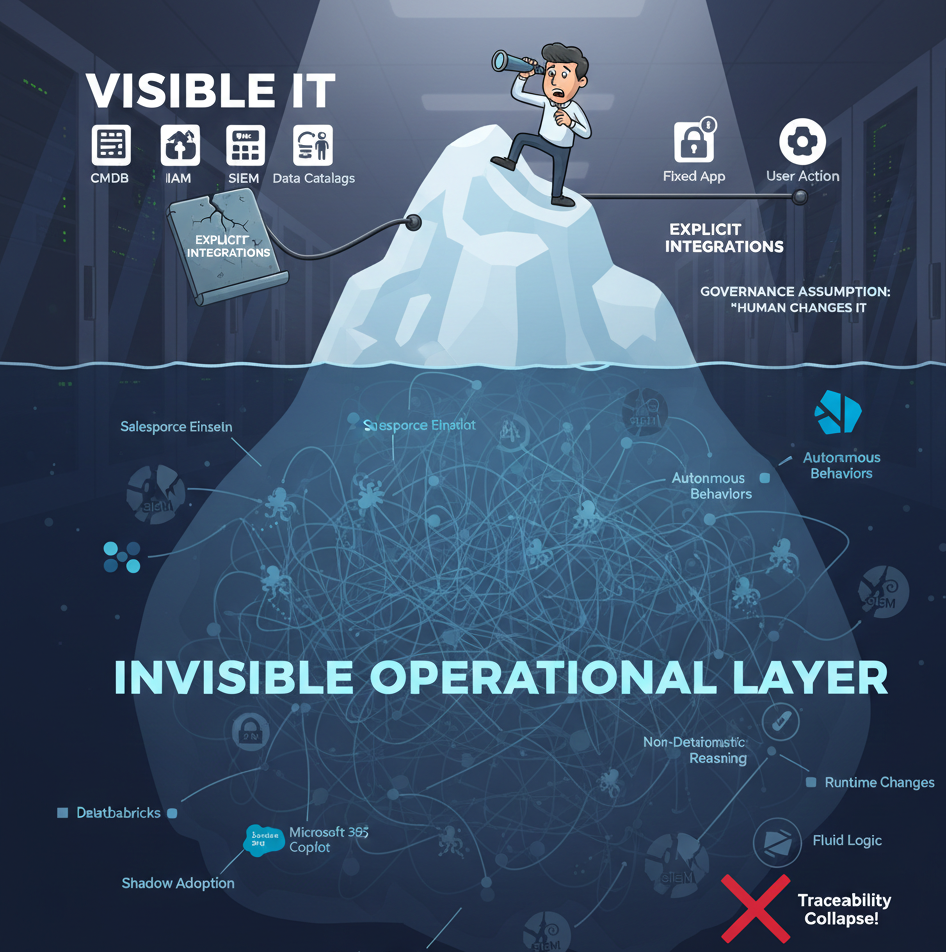

1. The Birth, Growth & Depth of the Invisible Layer

AI agents are a new class of applications that think, reason, adapt, and act.

They don’t follow fixed logic. They generate it.

Birth

Traditional apps are deterministic. AI breaks that model. AI agents plan, reason, choose tools, retrieve knowledge, and evolve with context.

Examples:

- A developer uses GitHub Copilot and the assistant decides to modify multiple files, add tests, and restructure code without being explicitly asked.
- A product team relies on Notion AI, which rewrites entire knowledge-base sections automatically based on inferred context.

This is the birth of an invisible operational layer that behaves nothing like software IT was designed to govern.

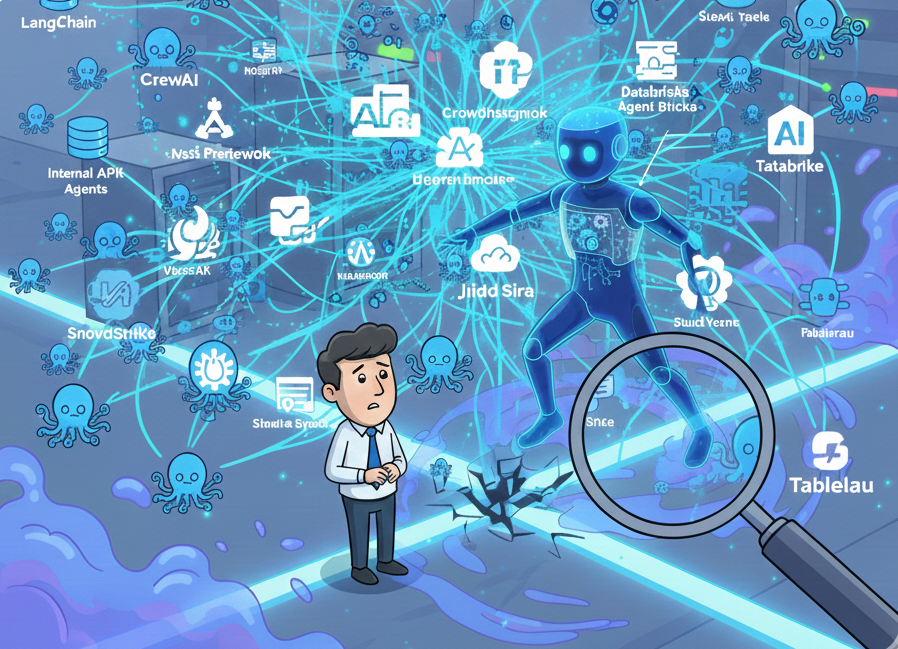

Growth

Developers now build AI agents across a fragmented builder and orchestration ecosystem: LangChain, CrewAI, Microsoft Agent Framework, Databricks Agent Bricks, Vertex AI Agents, MCP servers, and low-code AI orchestrators.

Examples:

- A security engineer uses CrewAI to build a SOC triage agent that reads CrowdStrike alerts and opens Jira tickets.
- A data engineer builds a LangChain agent that autogenerates Snowflake SQL and refreshes Tableau dashboards at runtime.

These agents perform real work across data pipelines, SaaS integrations, business workflows, and internal tools - yet none of it is explicitly surfaced to IT.

Depth

Meanwhile, SaaS platforms have been quietly shipping AI features by default: productivity suites, CRM platforms, security tools, developer tools, payroll systems, and ticketing systems.

Examples:

- Salesforce Einstein auto-drafts customer emails and suggests deal insights without IT involvement.
- Microsoft 365 Copilot rewrites documents, summarizes meetings, and drafts Teams posts - actions that look “normal” but are actually AI-driven.

An AI agent is no longer a single model. It is a network of autonomous behaviors spreading below the surface of enterprise systems.

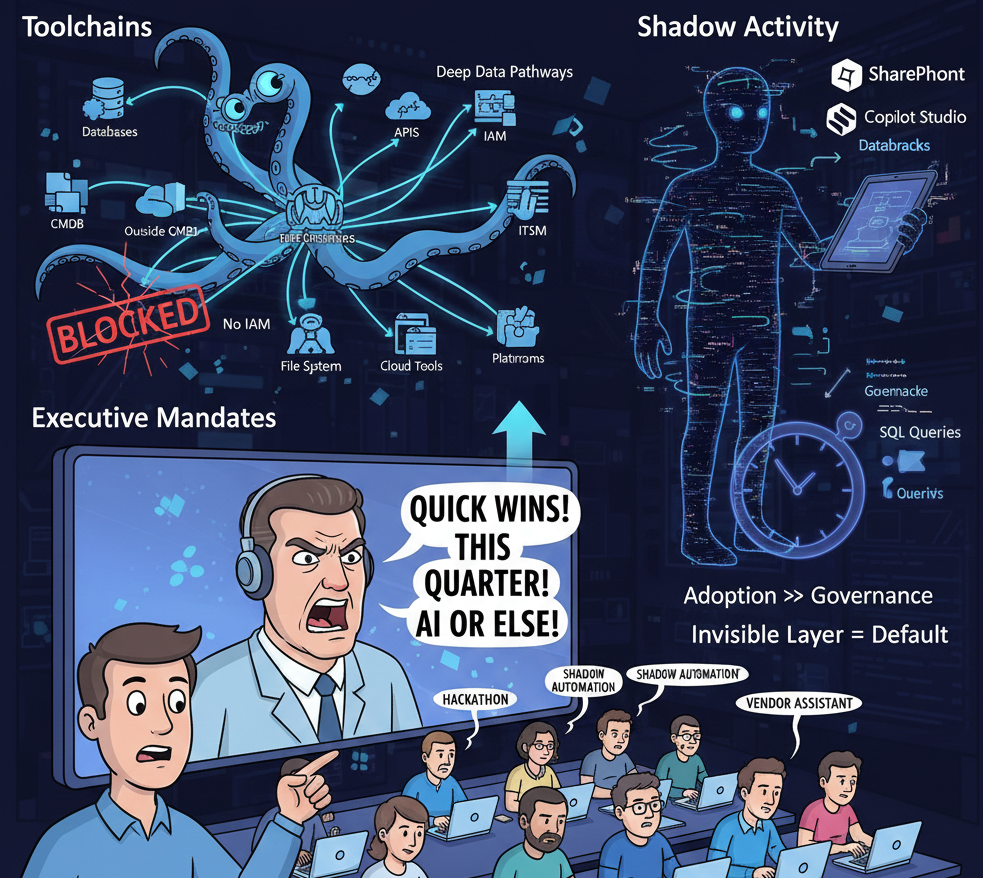

2. Toolchains, Mandates & Shadow Activity Accelerate the Problem

The invisible layer expands because modern AI toolchains prioritize flexibility - not governance.

Toolchains with too much power and deep reach

AI agents now connect directly to databases, internal APIs, file systems, memory systems, cloud tools, and SaaS APIs. They use MCP servers, RAG pipelines, embedding systems, vector databases, and dynamic SQL/HTTP tools.

Examples:

- A Claude agent uses an MCP server exposing internal HR APIs so employees can ask “What’s our PTO policy?” - pulling live data without IT review.
- A RAG workflow built by an intern retrieves S3 documents containing sensitive customer contracts to answer “What were our renewal terms last year?”

These toolchains give agents real-time access to sensitive systems but operate fully outside CMDBs, IAM, IGA, SIEM, or ITSM.
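To make that gap concrete, here is a toy sketch, not any vendor’s API: a static inventory snapshot standing in for a CMDB, next to an agent whose tools are attached at runtime. Every name in it (`INVENTORY`, `Agent`, `attach_tool`, `unknown_paths`, and the system identifiers) is hypothetical. The point it illustrates is simply that attaching a tool widens what the agent can reach without anything being written back to the system of record.

```python
from dataclasses import dataclass, field

# Hypothetical inventory snapshot, standing in for a CMDB: it records
# which applications are known to reach which data systems.
INVENTORY: dict[str, set[str]] = {
    "hr-portal": {"hr-api"},
    "crm": {"salesforce"},
}


@dataclass
class Agent:
    name: str
    # tool name -> data system that tool can reach (hypothetical model)
    tools: dict[str, str] = field(default_factory=dict)

    def attach_tool(self, tool: str, system: str) -> None:
        # Attaching a tool (e.g. through an MCP server) happens at runtime;
        # nothing here updates the inventory.
        self.tools[tool] = system

    def reachable_systems(self) -> set[str]:
        return set(self.tools.values())


def unknown_paths(agent: Agent, inventory: dict[str, set[str]]) -> set[str]:
    """Systems the agent can reach that the inventory does not attribute to it."""
    known = inventory.get(agent.name, set())
    return agent.reachable_systems() - known


agent = Agent("pto-helper")
agent.attach_tool("lookup_pto_policy", "hr-api")
agent.attach_tool("search_contracts", "s3://contracts")

print(sorted(unknown_paths(agent, INVENTORY)))  # ['hr-api', 's3://contracts']
```

The inventory still describes the world as it was before the agent existed; only a runtime view of the agent’s tools reveals the new data paths.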

Executive pressure drives speed over control

Mandates like “Find quick wins,” “Deliver something this quarter,” and “Your performance will depend on AI use” push teams to launch copilots, RAG workflows, hackathons, shadow automations, and vendor-provided assistants overnight.

Examples:

- A business unit creates a “deal desk agent” during a hackathon using Replit + Salesforce APIs, which sales quietly starts using in production.
- Finance builds a quick Vertex AI agent to summarize NetSuite data and send monthly commentary to leadership - no review, huge exposure.

Shadow experiments become production behavior

Third-party AI agents appear long before IT hears about them. Models get swapped. Tools get added. Data access widens. The pace of AI adoption exceeds the pace of governance by an order of magnitude.

Examples:

- A procurement agent built in Microsoft Copilot Studio starts reading vendor contracts from SharePoint, then routing decisions to email workflows.
- A BI assistant in Databricks begins running queries against Unity Catalog datasets with no lineage or enforced access constraints.

The invisible layer becomes the default execution path for agentic activity.

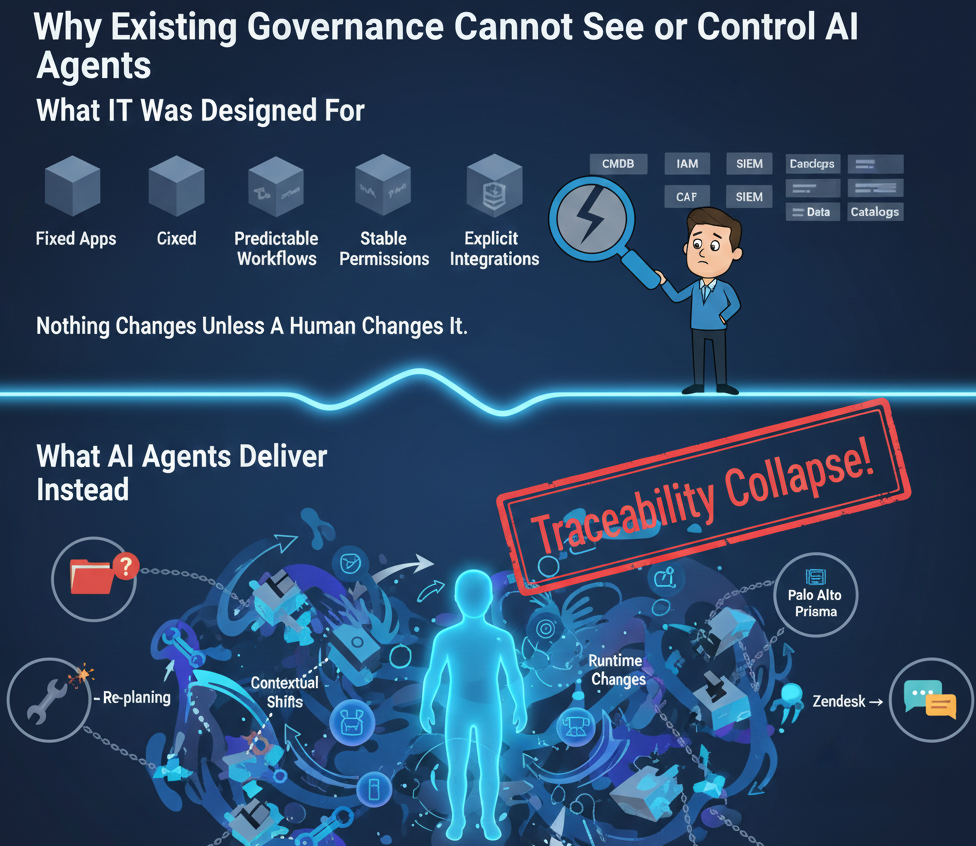

3. Why Existing Governance Cannot See or Control AI Agents

Traditional IT governance assumes a stable application environment.

AI agents deliver fluid, autonomous, adaptive behavior.

What IT was designed for

Fixed apps. Predictable workflows. Stable permissions. Explicit integrations. Deterministic behavior. User-driven actions. Logs that explain the past.

Systems of record - CMDB, IAM, SIEM, and data catalogs - assume:

Nothing changes unless a human changes it.

What AI agents deliver instead

AI agents re-plan constantly. Workflows shift with context. Tools and data sources change at runtime. Identities blur (agent-as-user, user-as-agent). Actions can be taken autonomously. Behavior changes run-to-run. Reasoning is non-deterministic.

The result: governance frameworks cannot model AI’s fluid behavior.
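A toy sketch of why “behavior changes run-to-run” defeats a static model, assuming a planner that samples its next tool the way a language model sampled at non-zero temperature might. The planner here is a random stand-in, not a real model, and the tool names are hypothetical. A traditional workflow takes the same path for the same input; the sampled planner does not.

```python
import random

# Hypothetical tool catalog; a real agent would expose these to a model.
TOOLS = ["search_sharepoint", "query_snowflake", "call_hr_api", "send_email"]


def fixed_workflow(request: str) -> list[str]:
    # A traditional app: the same input always takes the same path.
    return ["query_snowflake", "send_email"]


def sampled_plan(request: str, rng: random.Random, max_steps: int = 3) -> list[str]:
    """Toy stand-in for an LLM planner: each next tool is sampled.
    (In this toy the request text is not used to pick tools.)"""
    steps: list[str] = []
    for _ in range(max_steps):
        tool = rng.choice(TOOLS)
        steps.append(tool)
        if tool == "send_email":  # treat an outbound action as the end of the run
            break
    return steps


request = "Summarize last quarter's renewals for leadership"
fixed = {tuple(fixed_workflow(request)) for _ in range(20)}
sampled = {tuple(sampled_plan(request, random.Random(seed))) for seed in range(20)}

print(len(fixed), "path(s) for the fixed workflow")
print(len(sampled), "distinct tool sequences for the sampled planner")
```

Governance built around the first function can document “the” workflow once; for the second, there is no single workflow to document.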

The traceability collapse

AI systems can read documents, query production data, call APIs, run SQL, invoke tools, trigger workflows, switch models, and push outputs to downstream systems - yet enterprises cannot answer basic questions.

Examples of traceability failures:

- A confidential PowerPoint slide resurfaced in a sales deck. Did a user upload it, or did Copilot retrieve it from a restricted SharePoint library?
- A firewall rule in Palo Alto Prisma changed. Was it a human, a script, or a SOC agent built in CrewAI?
- A customer saw internal data in Zendesk. Did a support rep paste it, or did a RAG step pull the wrong document?
- Sensitive SQL ran on Snowflake. Was it manually executed, or triggered by a LangChain SQLTool inside an agent loop?
- Code was pushed to GitHub. Was it written by a developer or auto-generated by GitHub Copilot during a reasoning step?
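The Snowflake question in the list above can be sketched in a few lines. This is an illustration under stated assumptions, not Snowflake’s actual audit schema: it assumes a log that records only the credential, the action, and the target, and a hypothetical shared service account used by an analyst, a scheduled script, and an agent tool call alike. Under those assumptions, the three records are indistinguishable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEvent:
    # Hypothetical log schema: it captures the credential, the action, and
    # the target, but has no field for actor type or agent reasoning step.
    principal: str
    action: str
    target: str


def run_sql_as(principal: str, query: str) -> AuditEvent:
    # Whoever invokes this, the log only sees the credential that was used.
    return AuditEvent(principal=principal, action="EXECUTE_SQL", target=query)


QUERY = "SELECT * FROM customers"
SERVICE_ACCOUNT = "svc-analytics"  # hypothetical shared credential

by_human = run_sql_as(SERVICE_ACCOUNT, QUERY)   # analyst pasting a query
by_script = run_sql_as(SERVICE_ACCOUNT, QUERY)  # scheduled job
by_agent = run_sql_as(SERVICE_ACCOUNT, QUERY)   # tool call inside an agent loop

print(by_human == by_script == by_agent)  # True
```

After the fact, nothing in these records can answer “human, script, or agent?”, which is exactly the traceability question the examples raise.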

AI behavior is happening outside the observable plane of enterprise systems.

4. Recap: The Invisible Layer Is Growing - Faster Than the Systems Meant to Govern It

To summarize:

- AI created a new class of dynamic, reasoning applications.
- Agents are built across fragmented, ungoverned platforms.
- SaaS tools ship AI without IT operational oversight.
- AI toolchains expose deep data pathways.
- Executive mandates accelerate shadow adoption.
- IT governance is calibrated for static systems, not dynamic agents.
- Traditional controls cannot model AI’s reasoning-driven behavior.
- Enterprises cannot see what AI touches, who it acts as, or why it acts.

The invisible layer isn’t some abstract idea. It’s a massive operational blind spot forming right at the heart of our enterprise technology.

Understanding this gap is the first step. What we, as a community, do next is the real discussion that needs to happen.