For decades, my focus has been squarely on securing data across the digital enterprise, tackling the foundational layers of access and trust. Having spent four years developing and scaling zero trust data security solutions at Votiro and, before that, delivering critical identity and data security solutions at IBM, I’ve witnessed firsthand how identity controls crumble under new technological pressures. We have learned to trust no user and no device.

The Race To Adopt AI Agents

Now we enter the next phase: the adoption of AI agents. These digital workers are inheriting human privileges, sharing often-flawed credentials with models, tools, and data sources, and operating confidently outside established identity frameworks. This is not just a vulnerability; it’s an architectural flaw.

The race to adopt AI agents is generating an unprecedented promise of efficiency and productivity, but it’s simultaneously creating an unseen identity chaos that fundamentally compromises your enterprise security and governance. We are approaching a scenario where a significant portion of the new digital workforce is operating with phantom identities, running on excessive permissions, and leaving no verifiable audit trail for traceability and accountability.

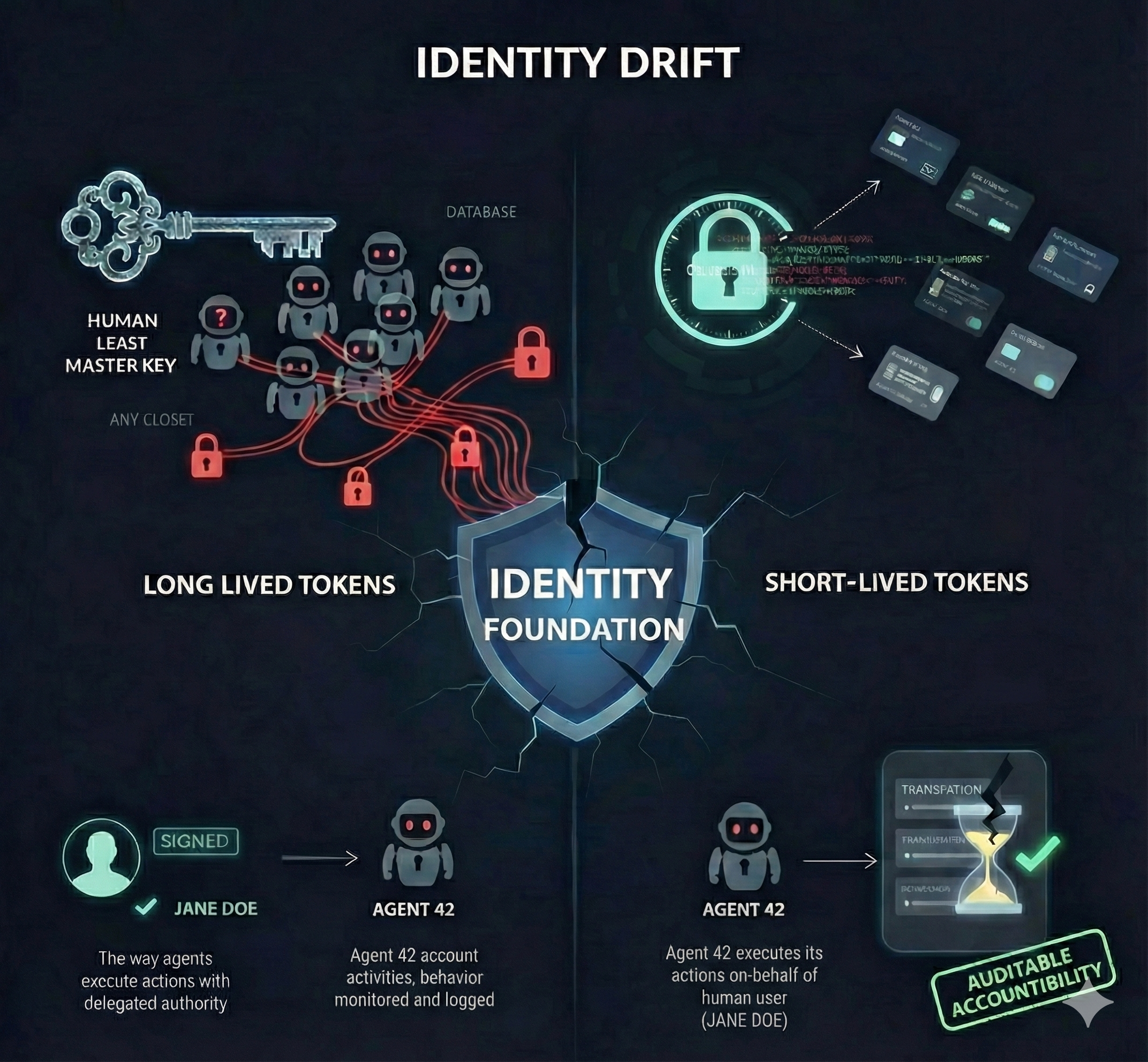

IT, security, and business leaders must recognize this problem now. Identity propagation is the key to AI agent governance. Without fixing this identity drift, governing AI agents securely is impossible.

The Eight Cracks in AI Agent Identity: Examples & Best Practices

AI agents are operating with deeply flawed identity practices that introduce significant, untraceable risk. These are the critical areas where Identity Drift is occurring, framed by concrete examples and immediate best practices:

| Identity Flaw | Example | Recommended Best Practice (Run-Time Governance) |
|---|---|---|
| 1. Shared Credentials | Imagine a janitor key labeled “ANY CLOSET” that every cleaning robot uses. If one robot is compromised, the attacker has the master key to every closet, including the ones in the restricted area. | Dedicated Machine Identity (Client IDs). Issue a unique, non-human client ID/secret pair for every distinct AI agent instance, not just the application. Bind the ID to the agent’s specific container or pod ID for runtime validation. |
| 2. Human IAM Roles | An agent that only needs to read customer feedback posts is given the IAM role of a “DevOps Engineer.” It now has the power to accidentally (or maliciously) delete production cloud resources it should never see. | Micro-Segmentation of Roles. Enforce roles based purely on the API functions the agent uses. If an agent only performs GET operations on a specific database table, its role should reflect only that permission. |
| 3. Long-Lived API Keys | A permanent API key (the equivalent of a house key under the doormat) is used for a quarterly data sync agent. If that key is leaked, it grants uninterrupted access for years, even if the agent is retired. | Runtime Token Exchange. Agents must use a secure secrets vault to request short-lived, expiring access tokens (e.g., 5–60 minutes) immediately prior to execution, preventing perpetual access. |
| 4. Unmapped Service Accounts | An agent uses a service account named svc_ai_01 created five years ago for a forgotten task. This account is never reviewed, has unknown permissions, and is an unmonitored back door for an intruder. | Mandatory Identity Tagging. Require all service accounts used by agents to be tagged with an Owner, Expiration Date, and Business Justification. Integrate these accounts into an automated identity review workflow. |
| 5. Missing On-Behalf-Of (OBO) | A CFO instructs Agent 42 to approve a $1M payment. The audit log only shows “Agent 42 Approved Payment.” When auditors ask who authorized the agent, there is no record linking the action back to the human. | Token Chaining/Claim Propagation. The initial human user’s token/claim must be programmatically linked (chained) to the agent’s token, ensuring the agent’s action carries the auditable context of the human requestor. |
| 6. Missing Delegated Access | A Microsoft 365 or Google Workspace copilot, or a third-party user-facing agent, is supposed to take actions on behalf of the user: responding to emails, accepting meeting invites, or sharing documents. Instead, the assistant uses its own system identity to perform the action. | Any user-facing copilot, assistant, or agent must operate exclusively through a delegated user token, not a standalone agent identity. At runtime, every agent action must cryptographically chain back to the human it represents, ensuring accurate ownership, authorization, and a reliable audit trail across all downstream systems. |
| 7. Tools Borrowing Credentials | An agent uses a general-purpose library to access a database. That library is running in an environment with high-privilege credentials for an HR system it was never supposed to touch. The agent accidentally uses the wrong, over-privileged key. | Isolated Run-Time Environments. Deploy agents in minimal, isolated, and containerized environments (e.g., specific Kubernetes pods) where only the environment variables and secrets needed for each agent’s specific task are provisioned. |
| 8. Untraceable Actions | An unauthorized change occurs in production. The security team sees a log from “System A,” triggered by “Agent B,” instructed by “Service C.” The chain is broken, leaving a confusing trail of breadcrumbs instead of a single security event. | Unified Identity Telemetry. Standardize logging across all systems (cloud, SaaS, agent platform) to capture a consistent Correlation ID that links the initial human request, the agent’s ID, and all subsequent system calls. |
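To make the Unified Identity Telemetry practice from row 8 concrete, here is a minimal Python sketch of a shared audit record. The `AuditEvent` fields, the helper names, and the sample principals ("jane.doe", "agent-b", and so on) are all illustrative assumptions, not a standard schema or any vendor's format. The idea is only that one Correlation ID is minted when the human request enters the system and is then carried unchanged through every downstream log entry.

```python
import json
import uuid
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical log record shared by every system in the chain."""
    correlation_id: str   # identical for every event in one request chain
    human_principal: str  # the person who initiated the request
    agent_id: str         # the agent's own dedicated identity
    system: str           # the system that performed this step
    action: str
    timestamp: str


def new_correlation_id() -> str:
    """Mint one ID at the point where the human request enters the system."""
    return str(uuid.uuid4())


def make_event(correlation_id, human_principal, agent_id, system, action):
    """Build one audit record stamped with the current UTC time."""
    return AuditEvent(correlation_id, human_principal, agent_id, system, action,
                      datetime.now(timezone.utc).isoformat())


def trace(events, correlation_id):
    """Reassemble a single request chain from a mixed log stream."""
    return [e for e in events if e.correlation_id == correlation_id]


# Two unrelated requests interleaved in the same log stream.
cid = new_correlation_id()
log = [
    make_event(cid, "jane.doe", "agent-b", "service-c", "instruct_agent"),
    make_event(new_correlation_id(), "john.roe", "agent-x", "system-z", "read_report"),
    make_event(cid, "jane.doe", "agent-b", "system-a", "update_config"),
]

# Filtering on the Correlation ID recovers the full chain as one security event.
for event in trace(log, cid):
    print(json.dumps(asdict(event)))
```

With a record like this, the "System A / Agent B / Service C" breadcrumbs in the example collapse into one queryable chain that names both the agent and the human requestor.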

The Path Forward: Reclaiming the Identity Foundation for AI Agents

We must enforce a zero-trust identity model for all AI agents. This is an operational imperative, not a nice-to-have.

- Establish Unique, Least-Privileged Identities: Every agent must have its own unique, machine-specific IAM role with permissions scoped strictly to the functions it must perform. The Analogy: Instead of giving your agent a Master Key (a full Human IAM role) that opens every door in the building, provision it with a single-purpose access card that only unlocks the specific storeroom it needs for a specific task.

- Mandate Short-Lived Credentials: All agent access (API keys, tokens) must be short-lived, rotated automatically, and secured via hardened secrets management vaults. The Analogy: You wouldn’t give a temporary house sitter a permanent physical key. Instead, the agent must use a time-sensitive key card that expires every hour and must be dynamically renewed, just like a temporary hotel key. Stolen keys expire quickly, limiting exposure and damage.

- Enforce On-Behalf-Of (OBO): Implement an architectural standard that ensures every agent action logs both the agent’s ID and the specific human user who authorized the task. The Analogy: The agent can no longer act anonymously. Every critical operation must be accompanied by a signed, notarized memo that explicitly states: “I, Agent 42, am performing this action at the express direction of Jane Doe.” This restores the accountability, traceability, and auditability that are essential for AI governance.
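The three requirements above can be sketched together in a few lines of Python. This is a toy illustration under stated assumptions, not a production token format: the HMAC-signed payload stands in for whatever signed token your identity provider issues, `SIGNING_KEY` would come from a secrets vault rather than source code, and the function names and scope strings are hypothetical. The claim layout is loosely modeled on OAuth 2.0 Token Exchange, where `sub` names the human and `act` names the agent acting for them.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import List, Optional

SIGNING_KEY = b"demo-only-key"  # assumption: in practice, fetched from a secrets vault


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def _sign(payload: str) -> str:
    return _b64(hmac.new(SIGNING_KEY, payload.encode("ascii"), hashlib.sha256).digest())


def issue_token(human: str, agent_id: str, scopes: List[str],
                ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issue a short-lived token that names both the human and the agent."""
    issued = time.time() if now is None else now
    claims = {
        "sub": human,                # the human who authorized the task
        "act": {"sub": agent_id},    # the agent acting on that human's behalf
        "scope": sorted(scopes),     # least privilege: only what this task needs
        "exp": issued + ttl_seconds, # short-lived: expires after ttl_seconds
    }
    payload = _b64(json.dumps(claims, sort_keys=True).encode("utf-8"))
    return f"{payload}.{_sign(payload)}"


def authorize(token: str, required_scope: str, now: Optional[float] = None) -> dict:
    """Check signature, expiry, and scope; return an audit record naming both parties."""
    payload, _, signature = token.partition(".")
    if not hmac.compare_digest(signature, _sign(payload)):
        raise PermissionError("invalid signature")
    claims = json.loads(base64.urlsafe_b64decode(payload))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        raise PermissionError("token expired")
    if required_scope not in claims["scope"]:
        raise PermissionError(f"scope {required_scope!r} not granted")
    return {
        "agent": claims["act"]["sub"],
        "on_behalf_of": claims["sub"],
        "action": required_scope,
    }


token = issue_token("jane.doe", "agent-42", ["payments:approve"], ttl_seconds=300)
print(authorize(token, "payments:approve"))
```

The returned record is the "notarized memo" from the analogy: it cannot be produced without a valid, unexpired token, and it always states which agent acted and for whom. A request for any scope outside the token's list, or any use after expiry, fails closed with `PermissionError`.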

The identity flaws outlined above aren’t edge cases; they’re structural gaps. And they strike at the heart of what enterprise AI governance needs most: traceability, auditability, and accountability. To successfully integrate this new digital workforce, IT and security leadership need to act now. The path forward begins with an identity-first design for AI agents, treating identity not as security plumbing but as the foundation of safe, enterprise-grade agency. That means giving every AI agent a unique, least-privileged identity, ensuring its access tokens are short-lived, and always connecting its actions back to the human who authorized the work (OBO or delegated access, depending on the interaction model).

Implementing this identity-first framework is how we move past today’s identity drift and build AI environments that are not only powerful, but secure, accountable, and operationally trustworthy.