A new operational layer is emerging in the enterprise: the Autonomous Core, a set of AI agents that act as dynamic, digital decision-makers, assembling workflows and interacting with critical systems and data at run-time. For IT Operations and Cybersecurity professionals, this represents an unprecedented challenge. To understand the resulting operational governance gap, it’s essential to look at the limitations of the observability tools currently deployed.

The Dawn of the Autonomous Core

This core consists of AI agents that are not just passive tools, but agents whose behavior, access patterns, tool usage, and cost profiles shift continuously. As they take on real work, they create a new, high-stakes operational governance gap that traditional IT controls simply weren’t designed to address. The question is no longer if an AI agent will make a costly mistake, but how IT can control and govern it in real-time.

The Problem: When “What Happened” Isn’t Enough

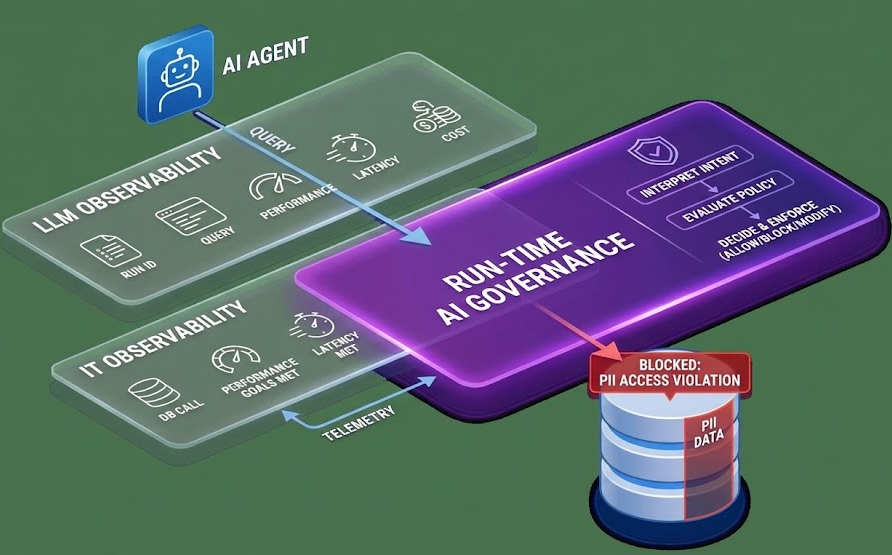

- Traditional IT Observability (e.g., Datadog, New Relic, Dynatrace): These platforms answer “What service failed?” or “What API was called?” They provide high-fidelity telemetry on systems and applications, but they only describe what happened at the infrastructure level. They lack the context of the AI agent’s intent and the ground truth needed to judge whether the action should have been allowed.

- LLM Observability (e.g., Langfuse, LangSmith): These platforms add semantic detail about AI agent runs, answering “What prompts were generated?” or “What tools did the AI agent call?” This layer is also fundamentally descriptive. It tells you what the AI agent did and how it executed, but it cannot tell you why the action was allowed or whether it violated a policy.

The Need for Run-time AI Governance

IT Operations and Audit teams need a central operational governance fabric that moves beyond mere description by enriching telemetry with data from systems of record. This is Run-time AI Governance: the critical layer that tells you whether your AI agent is acting responsibly and in compliance with your policies and, most importantly, allows you to intervene instantly.

Run-time AI Governance delivers this control by connecting two previously separate elements:

- The AI Agent’s Run-Time Intent: What the agent is trying to do (models → tools → data).

- The CIO’s Policy Ground Truth: Established policies across existing enterprise systems of record such as CMDB, IDP, and data catalogs.

Illustrative Example: Data Access Violation

Consider an AI agent accessing a column in a database table containing PII data:

- IT Observability: Tells you that a database call happened and that performance and latency goals were met.

- LLM Observability: Tells you which AI agent, which run, and which query were involved, and that the AI agent’s performance, latency, and cost were tracked.

- New Run-time AI Governance: Tells you why it was allowed (or not prevented), e.g., a missing or misaligned policy, and proactively prevents the violation in real time.
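To make the "why it was allowed" idea concrete, here is a minimal sketch of how a governance layer might attach a reason to each access decision. Everything in it is a hypothetical stand-in: `CATALOG_TAGS`, `ACCESS_POLICIES`, and `explain_access` are illustrative names, and the in-memory dictionaries take the place of a real data catalog and policy store. It covers only the missing-policy case; detecting a misaligned policy would need additional context.

```python
from typing import Dict, Optional, Set

# Hypothetical data-catalog tags: (table, column) -> classification.
CATALOG_TAGS: Dict[tuple, str] = {("customers", "ssn"): "PII"}

# Hypothetical access policies: classification -> agent roles allowed to read it.
# An absent key means no policy was ever defined for that classification.
ACCESS_POLICIES: Dict[str, Set[str]] = {}


def explain_access(role: str, table: str, column: str,
                   policies: Optional[Dict[str, Set[str]]] = None) -> str:
    """Return a human-readable reason for the access decision."""
    if policies is None:
        policies = ACCESS_POLICIES
    tag = CATALOG_TAGS.get((table, column))
    if tag is None:
        return "allowed: column is unclassified"
    allowed_roles = policies.get(tag)
    if allowed_roles is None:
        # Nothing forbids the read, which is exactly the gap to surface.
        return f"allowed by default: no policy defined for {tag} data (missing policy)"
    if role in allowed_roles:
        return f"allowed: role '{role}' is granted {tag} access by policy"
    return f"blocked: role '{role}' is not granted {tag} access"


if __name__ == "__main__":
    print(explain_access("support-agent", "customers", "ssn"))
    print(explain_access("support-agent", "customers", "ssn",
                         {"PII": {"billing-agent"}}))
```

The first call reports that the read went through only because no PII policy exists. The second shows the same request being blocked once a policy is defined.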

A Run-time AI Governance solution sits on top of your existing monitoring infrastructure, consumes telemetry from existing IT and/or LLM observability layers, and then:

- Interprets AI agent intent at run-time.

- Evaluates that intent against enterprise policy ground truth.

- Decides whether the action should be allowed, modified, or blocked.

- Enforces controls on access, tools, data, identity, and cost.
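The four steps above can be sketched as a small pipeline. This is an illustrative sketch under stated assumptions, not a reference implementation: the event shape, the `PII_COLUMNS` and `PII_CLEARED_AGENTS` sets, and all function names are hypothetical, and a real deployment would read policy from systems of record rather than from constants.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Decision(Enum):
    ALLOW = "allow"
    MODIFY = "modify"
    BLOCK = "block"


@dataclass(frozen=True)
class Intent:
    """What the agent is trying to do, reconstructed from telemetry."""
    agent_id: str
    tool: str
    table: str
    columns: Tuple[str, ...]


# Hypothetical policy ground truth (stand-ins for a data catalog and an IDP).
PII_COLUMNS = {("customers", "ssn"), ("customers", "email")}
PII_CLEARED_AGENTS = {"billing-agent"}


def interpret(event: dict) -> Intent:
    """Step 1: turn a raw telemetry event into a structured intent."""
    return Intent(event["agent_id"], event["tool"],
                  event["table"], tuple(event["columns"]))


def evaluate(intent: Intent) -> List[str]:
    """Step 2: list the requested columns that policy forbids for this agent."""
    if intent.agent_id in PII_CLEARED_AGENTS:
        return []
    return [c for c in intent.columns if (intent.table, c) in PII_COLUMNS]


def decide(intent: Intent, violations: List[str]) -> Decision:
    """Step 3: allow, modify (drop forbidden columns), or block outright."""
    if not violations:
        return Decision.ALLOW
    if len(violations) < len(intent.columns):
        return Decision.MODIFY
    return Decision.BLOCK


def enforce(intent: Intent, decision: Decision,
            violations: List[str]) -> Tuple[str, ...]:
    """Step 4: return the columns the agent may actually read."""
    if decision is Decision.BLOCK:
        return ()
    return tuple(c for c in intent.columns if c not in violations)


def govern(event: dict) -> Tuple[Decision, Tuple[str, ...]]:
    """Run interpret -> evaluate -> decide -> enforce for one event."""
    intent = interpret(event)
    violations = evaluate(intent)
    decision = decide(intent, violations)
    return decision, enforce(intent, decision, violations)


if __name__ == "__main__":
    event = {"agent_id": "support-agent", "tool": "sql_query",
             "table": "customers", "columns": ["name", "ssn"]}
    print(govern(event))
```

In this sketch, a request that mixes permitted and PII columns is modified rather than blocked, so the agent still receives the non-sensitive columns. Whether to modify or block in that case is a policy choice, not something the source prescribes.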

Combining Run-time AI Governance with IT & LLM Observability

The most effective approach for governing your Autonomous Core is to integrate the descriptive power of Observability with the prescriptive controls of policies defined in existing systems of record. By combining these solutions, your enterprise gains immediate and actionable operational benefits:

- Gain Full Control: Achieve comprehensive, real-time authority over AI agents’ behavior, access, tools, and data usage that neither platform could offer alone.

- Leverage Existing Investments: Protect and extend the value of your current observability and LLM monitoring stacks by giving their data an enforcement endpoint.

- Ensure Auditability: Produce the necessary accountability, traceability, and audit trails required for regulatory and internal compliance.

- Scale to Production: Move confidently from pilot programs to full production agent deployments with the assurance that all AI actions are aligned with enterprise policy.

Run-time AI Governance gives IT Operations and Audit teams the control they need to safely and confidently scale the Autonomous Core. Without this new solution layer, teams will spend significant manual effort correlating telemetry data with AI usage (homegrown, third-party, and/or embedded in SaaS). If you’re interested in discussing the primary requirements for Run-time AI Governance, reach out to info@langguard.ai.