AI agents are rapidly becoming part of the enterprise’s autonomous core - systems that plan, reason, and act across identity, data, tools, models, and infrastructure with little or no human involvement.

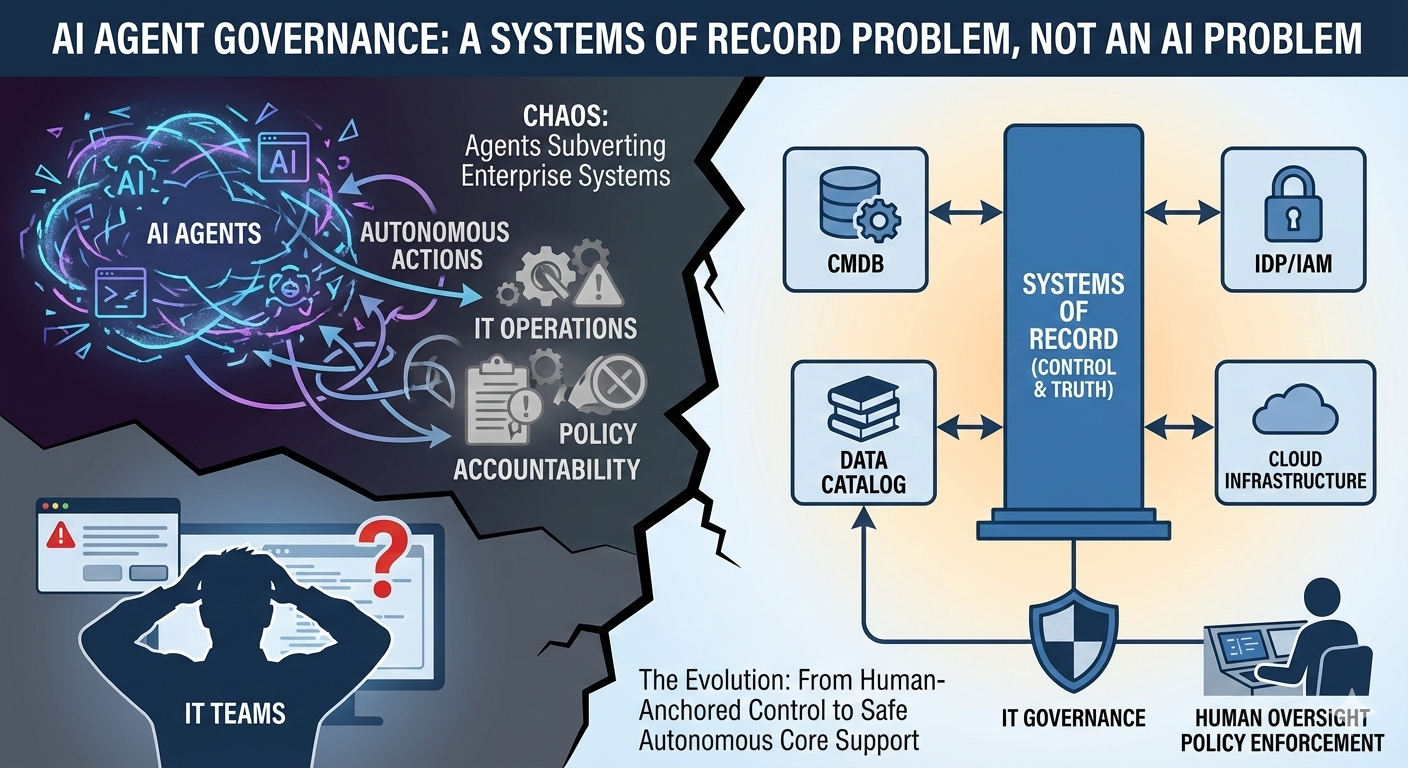

As this shift accelerates, a common narrative has emerged: agent governance is an AI problem. Better models. Better prompts. Better agent frameworks.

That framing is wrong.

Agent governance is a Systems of Record problem.

Systems of Record as Enterprise Ground Truth

For decades, enterprises have operated IT by anchoring decisions, controls, and accountability to ground truth. Systems of Record define what the enterprise believes to be true at any moment - who an identity is, which credentials are valid, what data is sensitive, which tools and models are approved, and which actions are permitted.

Enterprise governance has been built on this foundation. Identity and access flowed from IAM systems. Data ownership and sensitivity were defined in data catalogs. Infrastructure state was governed through cloud control planes. Credential scope was managed through secrets managers. Approved models lived in registries. Budgets, quotas, and limits were enforced through cost controls. Together, these systems established the canonical truth IT relied on.

Humans operationalized that truth. Approvals were explicit. Change was deliberate. When ambiguity existed, humans resolved it. When boundaries were unclear, humans escalated. Governance assumed a human sat at the point of decision - interpreting ground truth before acting on it.

Agents Break the Operating Model - Not the Ground Truth

AI agents don’t invalidate enterprise ground truth. They invalidate the operating model built around humans acting on that truth.

The same Systems of Record still define identity, credentials, data sensitivity, approved tools and models, and cost limits. What has changed is who is acting - and how fast. Agents operate continuously and programmatically across systems, without the pause points IT has always relied on to apply judgment.

This is where governance starts to fail in practice.

Agent Identity

IT teams are used to onboarding users, assigning roles, and reviewing access over time. Agents don’t follow that path. They are spun up dynamically to complete tasks, often without a clear answer to basic questions: is this a unique identity, is it acting on behalf of a human or a system, and what level of privilege should it have?

When identity ground truth isn’t explicit, the agent still executes - leaving IT to reconstruct attribution and accountability after the fact.

Credentials & Secrets

Agents need credentials to operate, and they will use whatever allows them to proceed. In practice, this leads to reused tokens, overly broad scopes, and secrets issued to humans or services being pulled into automated loops.

The failure isn’t the absence of credential systems. It’s that the operating model assumed a human would decide when and how those credentials were used.

Tools & APIs

Agents assemble tools dynamically. An API approved for read-only use becomes a write path. An internal tool becomes part of an automated workflow. An integration approved for human use becomes the agent’s default mechanism for change.

From IT’s perspective, nothing is misconfigured - but the boundary between allowed and appropriate has collapsed.

Models

When agents select models at runtime, IT teams face new questions: is the model approved, is it allowed for this data, is it internal or external, and is it appropriate for this task?

Agents don’t pause to ask. They optimize for task completion. If constraints aren’t explicit and enforced, agents proceed as though they don’t exist.

Data Access

Agents pull context aggressively - documents, logs, tickets, configurations, and knowledge bases. If data sensitivity and usage purpose aren’t enforced explicitly, agents cross boundaries humans would have instinctively respected.

What used to be a governance review now happens automatically - and silently.

Cost and Resource Consumption

Agents retry, reason, loop, and explore. Costs grow in ways that don’t map cleanly to projects, teams, or individuals. Budgets may exist, but the operating model assumed humans would self-regulate usage.

Agents don’t. They succeed - even when success is expensive.
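One way to picture a budget that does not depend on self-regulation is a guard that is charged before each agent step and stops the loop once the limit is reached. The sketch below is illustrative only: `AgentBudget`, `BudgetExceeded`, `run_with_budget`, and the dollar figures are hypothetical names and values, and a real limit would be read from the enterprise's cost system of record rather than hard-coded.

```python
from dataclasses import dataclass
from typing import Iterable


class BudgetExceeded(Exception):
    """Raised when a step would push an agent's spend past its limit."""


@dataclass
class AgentBudget:
    # Hypothetical fields; the limit is assumed to come from a cost system of record.
    agent_id: str
    limit_usd: float
    spent_usd: float = 0.0

    def charge(self, cost_usd: float) -> float:
        """Record spend before the step runs and return the remaining budget."""
        if cost_usd < 0:
            raise ValueError("cost must be non-negative")
        if self.spent_usd + cost_usd > self.limit_usd:
            raise BudgetExceeded(
                f"{self.agent_id}: {cost_usd:.2f} USD would exceed the "
                f"{self.limit_usd:.2f} USD limit"
            )
        self.spent_usd += cost_usd
        return self.limit_usd - self.spent_usd


def run_with_budget(budget: AgentBudget, step_costs: Iterable[float]) -> int:
    """Run steps in order; stop at the first one the budget refuses.

    Returns the number of steps that were allowed to run.
    """
    completed = 0
    for cost in step_costs:
        try:
            budget.charge(cost)
        except BudgetExceeded:
            break  # the limit, not the agent, decides when to stop
        completed += 1
    return completed


if __name__ == "__main__":
    budget = AgentBudget(agent_id="agent-1", limit_usd=1.0)
    print(run_with_budget(budget, [0.4, 0.4, 0.4]))
```

The design choice here is that the check happens before the step executes, so a retry loop ends when the limit is hit instead of being discovered on the next invoice.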

Why This Feels So Hard

The Systems of Record still exist. What’s missing is an operating model that assumes machines - not people - are acting on that truth. Agent governance feels hard because the control mechanisms were built for humans - and agents don’t wait.

Systems of Record Must Evolve to Support Agents

Agents do not replace Systems of Record. They force them to evolve.

That evolution requires:

- Explicit, machine-readable definitions of ground truth

- Clear ownership and authority boundaries

- Policies that govern how agents may act

- Constraints enforced at runtime, not after the fact

This shift turns Systems of Record into Systems of Action. Systems of Record define what is true. Systems of Action define what autonomous agents are allowed to do with that truth.
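As a rough illustration of the four requirements above, the sketch below keeps ground truth in a machine-readable policy, records an owner for each agent, and checks every requested action at runtime with a default of deny. Every name in it (`POLICY`, `ActionRequest`, `authorize`, the agent, tool, and model identifiers, and the sensitivity levels) is a hypothetical stand-in; in practice these values would be sourced from the IAM system, data catalog, and model registry.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical machine-readable ground truth, keyed by agent identity.
POLICY = {
    "ticket-triage-agent": {
        "owner": "it-service-desk",  # who is accountable for this agent
        "tools": {"ticketing.read", "ticketing.comment"},
        "models": {"internal-llm"},
        "max_data_sensitivity": "internal",
    },
}

# Assumed ordering of sensitivity labels, least to most sensitive.
SENSITIVITY_ORDER = ["public", "internal", "confidential", "restricted"]


@dataclass(frozen=True)
class ActionRequest:
    agent_id: str
    tool: str
    model: str
    data_sensitivity: str


def authorize(request: ActionRequest, policy: dict = POLICY) -> Tuple[bool, str]:
    """Decide at runtime whether an agent action is permitted (default deny)."""
    entry = policy.get(request.agent_id)
    if entry is None:
        return False, "unknown agent identity"
    if request.tool not in entry["tools"]:
        return False, f"tool not approved: {request.tool}"
    if request.model not in entry["models"]:
        return False, f"model not approved: {request.model}"
    if request.data_sensitivity not in SENSITIVITY_ORDER:
        return False, "unknown data sensitivity"
    allowed_level = SENSITIVITY_ORDER.index(entry["max_data_sensitivity"])
    if SENSITIVITY_ORDER.index(request.data_sensitivity) > allowed_level:
        return False, "data sensitivity above agent's limit"
    return True, "allowed"


if __name__ == "__main__":
    request = ActionRequest(
        "ticket-triage-agent", "ticketing.read", "internal-llm", "internal"
    )
    print(authorize(request))
```

Anything absent from the policy is refused, which is the opposite of the human-era assumption that an unlisted case would be escalated to a person for judgment.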

The Real Agent Governance Problem

Agent governance is not about models, prompts, or frameworks. It is about whether enterprise ground truth is explicit, enforceable, and safe for autonomy. Autonomous agents are exposing which Systems of Record were never designed to govern machine-driven execution.

That is the challenge now facing IT and CIOs - and the work that must be done next.