This year, enterprise IT will arguably go through its most significant change since the migration from on-premises servers to the cloud. We are moving to a future defined by Agentic AI. These systems are not merely passive text generators; they are autonomous agents capable of planning, reasoning, accessing corporate data, and executing complex workflows on our behalf. This shift exposes a critical, widening gap in the modern technology stack.

Traditional security tools like firewalls, API gateways, and Identity and Access Management (IAM) systems were designed for deterministic, human-driven interactions. They operate on binary rules: allow or deny. They cannot monitor or govern non-deterministic AI agents that dynamically select tools, query databases, and generate code in real time based on evolving context.

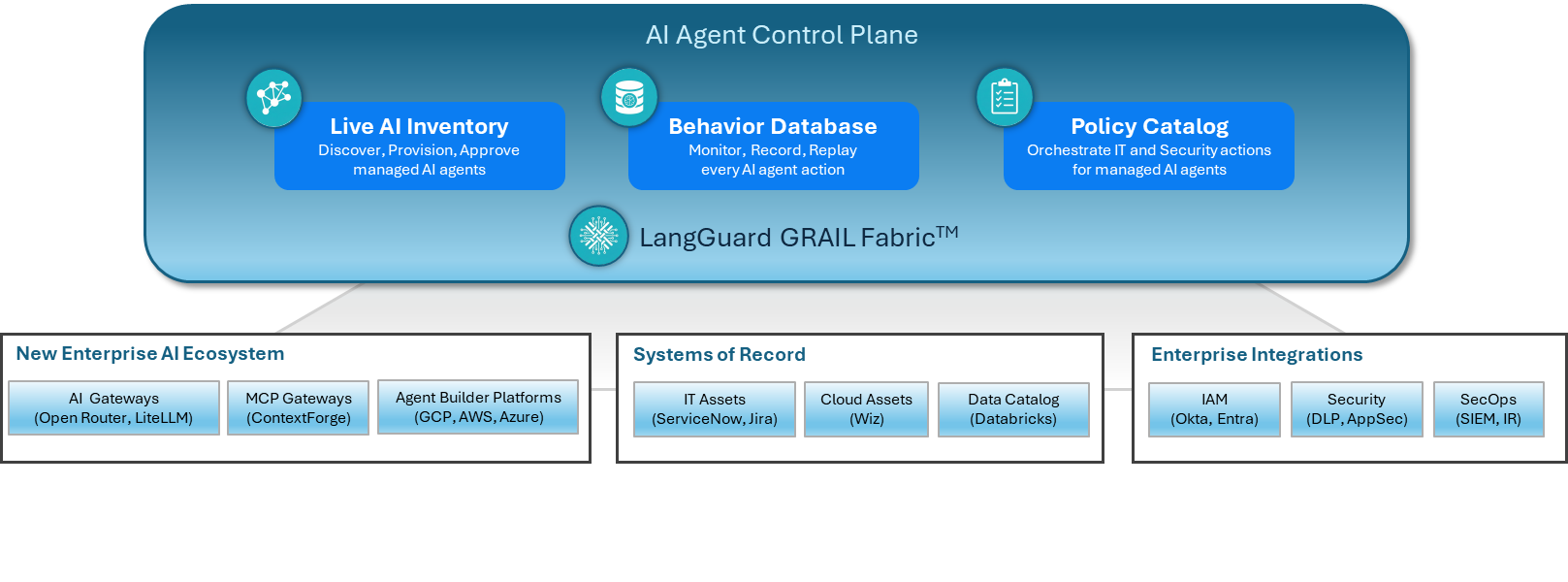

To bridge this gap, a new layer of essential infrastructure is emerging: the AI Control Plane. An AI Control Plane is not just a security tool. It is a centralized governance layer that sits “above” your AI infrastructure (your models, gateways, and agent platforms) and “below” your business intent. It connects AI execution to the “ground truth” of your enterprise Systems of Record. Instead of bluntly blocking AI activity, a control plane enables it by answering critical questions in real time: Who is this agent acting as? What data is it allowed to touch? Is its current behavior aligned with our business policy? Who should approve this?
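To make those four questions concrete, here is a minimal sketch of how a control plane might turn them into a decision that is richer than allow or deny. Everything in it (the `AgentRequest`, `Policy`, and `evaluate` names, the resource and role strings) is hypothetical and chosen for illustration; it does not describe any vendor's actual API.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional, Set, Tuple


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NEEDS_APPROVAL = "needs_approval"


@dataclass(frozen=True)
class AgentRequest:
    agent_id: str
    acting_as: Optional[str]  # identity the agent is acting for, if any
    resource: str             # data the agent wants to touch
    action: str               # what it wants to do with that data


@dataclass
class Policy:
    # Hypothetical policy shape: resource -> identities allowed to reach it via an agent.
    allowed_identities: Dict[str, Set[str]] = field(default_factory=dict)
    # Actions that always need human sign-off, mapped to the approver role.
    approval_required: Dict[str, str] = field(default_factory=dict)


def evaluate(request: AgentRequest, policy: Policy) -> Tuple[Decision, str]:
    """Answer the four questions in order and return a decision with a reason."""
    # Who is this agent acting as?
    if request.acting_as is None:
        return Decision.DENY, "agent has no delegated identity"
    # What data is it allowed to touch?
    allowed = policy.allowed_identities.get(request.resource, set())
    if request.acting_as not in allowed:
        return Decision.DENY, f"{request.acting_as} may not access {request.resource}"
    # Is the behavior aligned with policy, and who should approve it?
    approver = policy.approval_required.get(request.action)
    if approver is not None:
        return Decision.NEEDS_APPROVAL, f"route to {approver}"
    return Decision.ALLOW, "within policy"
```

The third outcome, `NEEDS_APPROVAL`, is the point of the sketch: rather than a binary gate, the request can be routed to a human approver while the workflow stays intact.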

The Business Case: Governance as an Accelerator

For business leaders, the AI Control Plane is not an insurance policy; it is a deployment accelerator. A pervasive myth in the industry is that security slows down innovation. In the context of Agentic AI, the opposite is true. Without a control plane, organizations remain stuck in “pilot purgatory,” afraid to let agents run autonomously because they lack the controls to ensure safety. Leading analyst firms have coalesced around the view that governance is the primary bottleneck preventing AI from moving to production. The consensus is clear: you cannot scale what you cannot control.

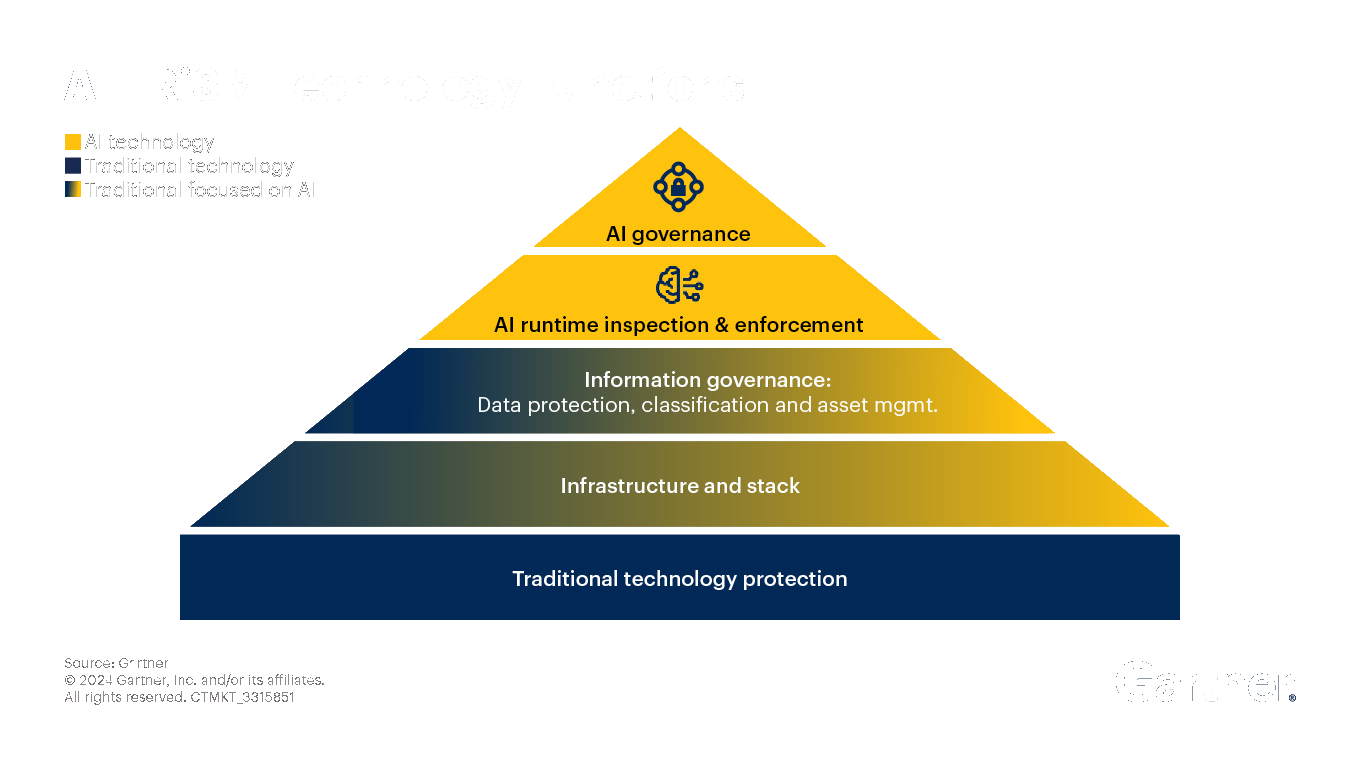

Gartner describes this imperative through their AI TRiSM (Trust, Risk, and Security Management) framework. Their research provides a compelling ROI for governance, predicting that by 2026, organizations that operationalize these principles will see a 50% improvement in adoption rates, business goals, and user acceptance. This isn’t just about compliance; it’s about giving the business the confidence to press the accelerator.

Crucially, Gartner reframes the risk profile for enterprise AI. They estimate that through 2026, 80% of unauthorized AI transactions will stem from internal policy violations—such as information oversharing or misguided AI behavior—rather than malicious external attacks. This highlights that the “enemy” isn’t necessarily a sophisticated hacker; it is often a well-meaning employee or a confused agent accessing data it shouldn’t. A control plane provides the visibility to catch these internal missteps before they become headlines.

From Monitoring to Intervention

While Gartner focuses on the ROI of trust, other firms are defining the architectural necessity of a control layer. As agents from diverse vendors begin to collaborate across ecosystems, enterprises cannot rely solely on the agent platforms themselves for governance. Forrester has formalized this market category as the Agent Control Plane. Analyst Leslie Joseph argues that oversight must live “outside the agent’s execution loop” to ensure monitoring and intervention remain possible even when an agent behaves unpredictably.

Forrester identifies three functional planes for the AI stack: the “Build” plane (models and frameworks), the “Embed” plane (workflows), and the “Govern” plane (the control layer). Without this distinct Govern plane, enterprises risk creating a fragmented landscape where every agent has its own unique, incompatible security rules.

IDC echoes this call for robust infrastructure, envisioning a next-generation AI control plane that transcends simple observability. They argue for a new class of operational infrastructure capable of understanding the state of each workflow in real time and enforcing compliance rules dynamically. This shifts the paradigm from reactive “monitoring” (logging what went wrong yesterday) to proactive “intervention,” allowing IT to pause or redirect rogue agents before damage occurs.
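The difference between monitoring and intervention can be sketched in a few lines. In this illustrative example, a supervisor sits outside the agent's execution loop, tracks the state of each workflow, and must authorize every step before it runs. The `Supervisor`, `WorkflowState`, and `max_records_rule` names, and the record-count rule itself, are assumptions made for the sketch rather than anything specified by the analysts.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Optional


@dataclass
class WorkflowState:
    workflow_id: str
    steps_taken: int = 0
    records_read: int = 0
    paused: bool = False
    audit: List[str] = field(default_factory=list)


# A rule inspects the current state plus the proposed step and returns
# a reason string if the step violates policy, or None if it is fine.
Rule = Callable[[WorkflowState, Dict[str, int]], Optional[str]]


def max_records_rule(limit: int) -> Rule:
    """Hypothetical compliance rule: cap the records one workflow may read."""
    def rule(state: WorkflowState, step: Dict[str, int]) -> Optional[str]:
        total = state.records_read + step.get("records", 0)
        if total > limit:
            return f"would read {total} records, limit is {limit}"
        return None
    return rule


class Supervisor:
    """Lives outside the agent; the agent asks before each step executes."""

    def __init__(self, rules: Iterable[Rule]) -> None:
        self.rules = list(rules)

    def authorize(self, state: WorkflowState, step: Dict[str, int]) -> bool:
        if state.paused:
            state.audit.append("blocked: workflow is paused")
            return False
        for rule in self.rules:
            reason = rule(state, step)
            if reason is not None:
                # Intervention: stop the workflow before the step runs.
                state.paused = True
                state.audit.append(f"paused: {reason}")
                return False
        state.steps_taken += 1
        state.records_read += step.get("records", 0)
        state.audit.append(f"allowed step {state.steps_taken}")
        return True
```

Because the check happens before the step executes, a violation pauses the workflow instead of merely appearing in tomorrow's logs. The audit trail still records both outcomes.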

The Shift to Agent Operating Systems

As “Agentic AI” scales, the scrutiny from enterprise boards is shifting from “how do we build agents?” to “how do we govern them?” HFS Research predicts that the lack of such control planes will be a primary pain point, creating demand for AI Control Planes that ensure accountability.

They note that intelligence without governance is chaos. As agents effectively become the new “workforce,” managing their lifecycle and permissions becomes as critical as managing human employees. We are entering a phase where the software itself is the manager, and that manager needs a rigid set of operational principles to ensure the digital workforce adheres to corporate standards.

Analyst Predictions and Frameworks

| Analyst Firm | Key Framework / Concept | Critical Prediction / Insight |
|---|---|---|
| Gartner | AI TRiSM | By 2026, operationalizing TRiSM leads to a 50% improvement in adoption; through 2026, 80% of unauthorized AI transactions will stem from internal policy violations. |
| Forrester | Agent Control Plane | Governance must exist “outside the execution loop” to manage multi-vendor agent ecosystems. |
| IDC | Next-Gen AI Control Plane | Governance requires dynamic policy enforcement and real-time state understanding, not just dashboards. |
| HFS Research | Agent Operating System | The focus will shift from building agents to governing them; “intelligence without governance is chaos”. |

The Data Intelligence Perspective

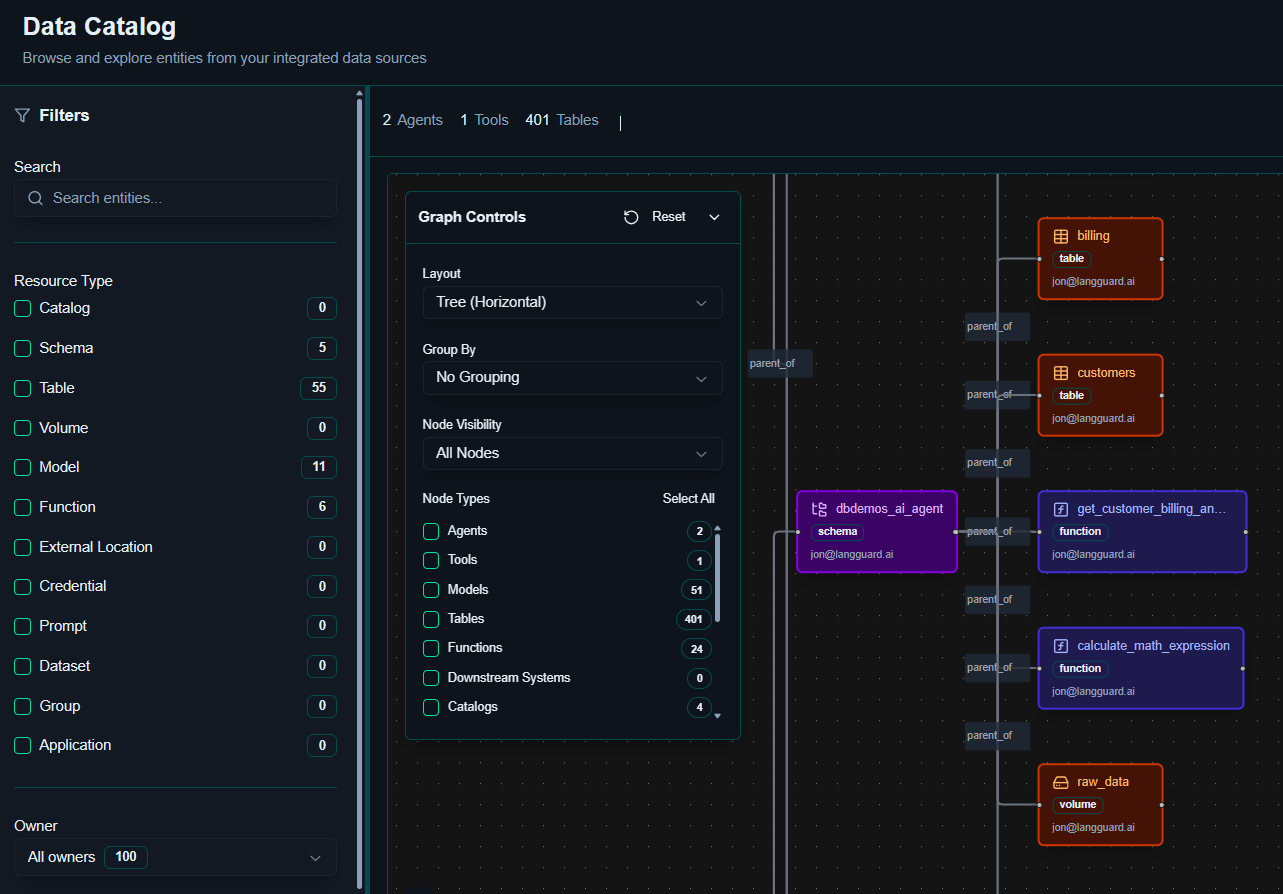

Ali Ghodsi, CEO of Databricks, anchors his view in Data Intelligence. He argues that you cannot govern AI if you don’t govern the data it uses. Ghodsi advocates for a data intelligence playbook where the Unity Catalog serves as the unified governance layer.

In this model, the control plane is inextricably linked to the data lake; agents are only as safe as the data governance policies that constrain them. He emphasizes that owning the “ground truth” of enterprise data is existential for successful AI adoption. If an agent can access ungoverned data, it can hallucinate or leak secrets, rendering the agent useless for enterprise applications.

Solutions Now: The LangGuard Approach

While the analysts and industry giants debate standards and macro-infrastructure, LangGuard is building a purpose-built AI Control Plane for IT and Security teams to use today. Our approach is pragmatic, viewing the control plane not as a barrier, but as a bridge that connects new AI capabilities to existing enterprise “ground truth” in order to help accelerate AI projects out of pilot and into production.

LangGuard’s platform operationalizes governance through a distinct lifecycle:

- Discovery & Screening: The system first identifies all AI agents operating in the environment, classifying them as either “Controlled” (managed by IT) or “Uncontrolled” (Shadow AI). It further distinguishes between “Known” agents (identifiable vendors) and “Unknown” entities, allowing IT to immediately revoke access for high-risk shadow agents.

- Provisioning: Once an agent is approved, LangGuard provisions it with specific, policy-driven credentials. This might include requirements for “On-Behalf-Of” (OBO) authentication or mandatory PII masking, ensuring the agent operates within safe bounds.

- Monitoring and Governance: The platform provides deep visibility into agent behavior, recording every action and decision for auditability. This turns governance from a one-time “gate” into an ongoing operational discipline.
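The first two stages of this lifecycle can be illustrated with a short sketch. It is not LangGuard's API: the `DiscoveredAgent`, `screen`, and `provision` names are hypothetical, and the rule that treats an agent that is both “Uncontrolled” and “Unknown” as high-risk is an assumption made purely for the example.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class DiscoveredAgent:
    name: str
    vendor: Optional[str]  # None when the vendor cannot be identified
    managed_by_it: bool


@dataclass(frozen=True)
class Screening:
    control: str         # "Controlled" or "Uncontrolled" (Shadow AI)
    identity: str        # "Known" or "Unknown"
    revoke_access: bool


def screen(agent: DiscoveredAgent, known_vendors: Set[str]) -> Screening:
    """Classify a discovered agent along the two axes described above."""
    control = "Controlled" if agent.managed_by_it else "Uncontrolled"
    identity = "Known" if agent.vendor in known_vendors else "Unknown"
    # Assumption for this sketch: unknown shadow agents count as high-risk.
    revoke = control == "Uncontrolled" and identity == "Unknown"
    return Screening(control, identity, revoke)


@dataclass(frozen=True)
class Credential:
    agent_name: str
    require_obo: bool  # agent must authenticate on behalf of a user
    mask_pii: bool     # PII is masked before the agent sees it


def provision(agent: DiscoveredAgent, screening: Screening,
              approved: bool) -> Optional[Credential]:
    """Issue policy-driven credentials only to approved, non-revoked agents."""
    if not approved or screening.revoke_access:
        return None
    return Credential(agent.name, require_obo=True, mask_pii=True)
```

In this sketch an unidentified shadow agent never receives credentials, while an approved, IT-managed agent is provisioned with OBO authentication and PII masking switched on by default.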

Conclusion

The “block-by-default” approach that served security teams well in the past is incompatible with the era of Agentic AI. Blocking an agent often breaks the very workflow it was designed to automate, driving users to shadow IT. The AI Control Plane offers a sophisticated alternative: a way to govern intent, enforce policy dynamically, and provide the evidence needed to trust autonomous systems.

As enterprises move from piloting simple chatbots to deploying agents that plan, reason, and execute real work, this control layer is becoming the most critical piece of infrastructure in the modern IT stack. It is the missing link that allows organizations to embrace the speed of AI without sacrificing the safety of the enterprise.